Using S3 FTP: object storage as filesystem

SAN, iSCSI, and local disks are block storage devices: volumes attached directly to a machine whose operating system drives your filesystem operations. Amazon S3, by contrast, is object storage. Interactions occur at the application level through an API, which means you can’t mount S3 directly within your operating system.

S3FS-Fuse is a FUSE-based file system (FUSE stands for Filesystem in Userspace) that lets a userspace program provide a fully functional filesystem. With it, we can mount a bucket as a local filesystem with read/write access. On an S3FS-mounted filesystem, we can simply use cp, mv, ls, and all the other basic Unix file management commands to manage resources as if they were on locally attached disks. So it seems that we’ve got all the pieces for an S3 FTP solution.

The following environment settings have been used in this documented setup. If you deviate from these values, make sure you use the values that you set within your own environment:

- S3 Bucket Name: ca-s3fs-bucket (you’ll need to use your own unique bucket name).
- EC2 Public IP Address: 18.236.230.74 (yours will definitely be different).
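As a minimal sketch of the mount step, the Python below writes an s3fs credentials file and builds the `s3fs` command line for the bucket named above. It assumes the `ACCESS_KEY_ID:SECRET_ACCESS_KEY` credentials-file format and the `passwd_file` option described in the s3fs-fuse README; the mount point `/home/ftpuser/ftp/files` and the helper names are hypothetical, and the command is only built here, not executed.

```python
import os
from pathlib import Path

# Bucket name from the setup above; the mount point is a hypothetical FTP user directory.
BUCKET = "ca-s3fs-bucket"
MOUNT_POINT = "/home/ftpuser/ftp/files"


def write_passwd_file(path: Path, access_key_id: str, secret_access_key: str) -> None:
    """Write s3fs credentials as ACCESS_KEY_ID:SECRET_ACCESS_KEY."""
    path.write_text(f"{access_key_id}:{secret_access_key}\n")
    # s3fs expects the credentials file to be private, so allow owner read/write only.
    os.chmod(path, 0o600)


def s3fs_mount_command(bucket: str, mount_point: str, passwd_file: Path) -> list[str]:
    """Build (but do not run) the s3fs command that mounts `bucket` at `mount_point`."""
    return [
        "s3fs", bucket, mount_point,
        "-o", f"passwd_file={passwd_file}",
        # FUSE option: let users other than the one who mounted (e.g. the FTP daemon) see the files.
        "-o", "allow_other",
    ]
```

Running the resulting list, for example with `subprocess.run(cmd, check=True)`, requires s3fs to be installed. When the mount is not done as root, `allow_other` also needs `user_allow_other` enabled in `/etc/fuse.conf`.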

NOTE: FTP is not a secure protocol and should not be used to transfer sensitive data. For that, you might consider using the SSH File Transfer Protocol (SFTP) instead. Last but not least, you can always optimize Amazon S3’s performance.

Why S3 FTP?

Amazon S3 is reliable and accessible, that’s why.

- Amazon S3 provides infrastructure that’s “designed for durability of 99.999999999% of objects.”
- Amazon S3 is built to provide “99.99% availability of objects over a given year.”
- You pay for exactly what you need, with no minimum commitments or up-front fees.
- With Amazon S3, there’s no limit to how much data you can store or when you can access it.

Also, in case you missed it, AWS announced some new Amazon S3 features during the last edition of re:Invent.
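The quoted figures become more concrete with a little arithmetic. This sketch (helper names are hypothetical) expresses each figure as a count of nines: 99.99% availability leaves 0.01% of a 525,600-minute year, about 52.6 minutes of downtime, and 11 nines of durability across 10,000,000 objects gives an expected loss of about 0.0001 objects per year, or roughly one object every 10,000 years.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes (leap years ignored)


def allowed_downtime_minutes(availability_nines: int) -> float:
    """Yearly downtime allowed by an availability of N nines (4 nines = 99.99%)."""
    return MINUTES_PER_YEAR * 10.0 ** -availability_nines


def expected_objects_lost_per_year(durability_nines: int, num_objects: int) -> float:
    """Expected yearly loss when each object has N nines of annual durability."""
    return num_objects * 10.0 ** -durability_nines


def years_per_lost_object(durability_nines: int, num_objects: int) -> float:
    """Average number of years between single-object losses."""
    return 1 / expected_objects_lost_per_year(durability_nines, num_objects)


print(f"{allowed_downtime_minutes(4):.1f} minutes of downtime per year")
print(f"{years_per_lost_object(11, 10_000_000):,.0f} years between lost objects")
```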

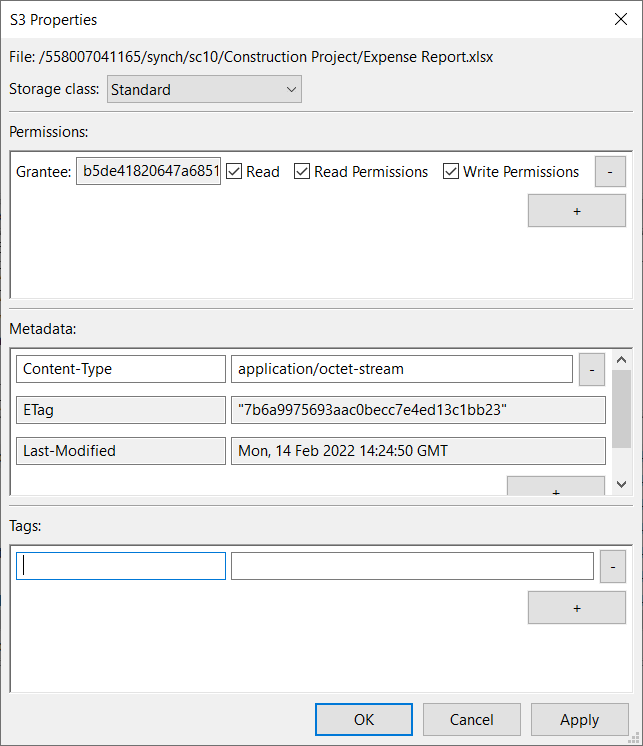

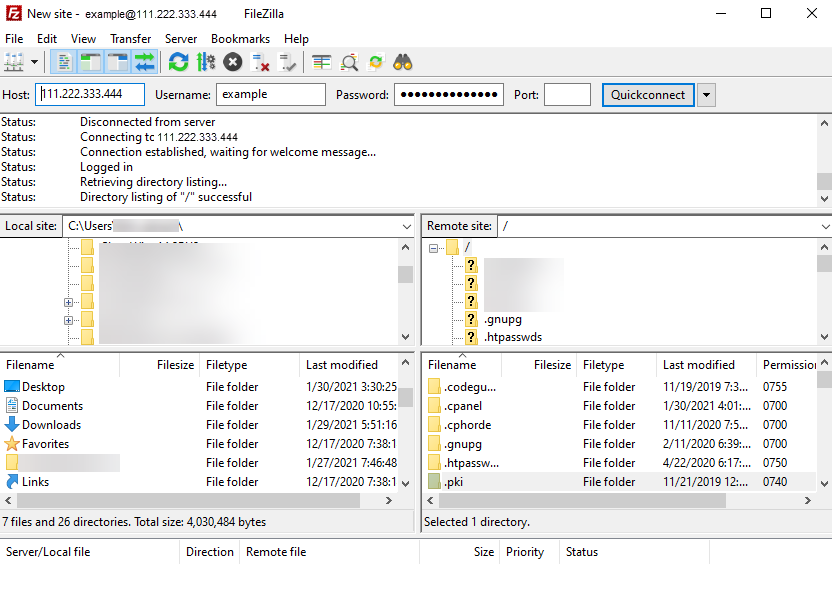

Is it possible to create an S3 FTP file backup/transfer solution, minimizing the associated file storage and capacity planning administration headache?

Updated 14/Aug/2019: streamlined the instructions and confirmed that they are still valid and work.

FTP (File Transfer Protocol) is a fast and convenient way to transfer large files over the Internet. You might, at some point, have configured an FTP server and used block storage, NAS, or a SAN as your backend. However, using this kind of storage requires infrastructure support and can cost you a fair amount of time and money.

Could an S3 FTP solution work better? Since AWS’s reliable and competitively priced infrastructure is just sitting there waiting to be used, we were curious to see whether AWS could give us what we need without the administration headache.

CONNECT TO S3 WITH FILEZILLA ZIP

This blog explains the steps to move files or folders from an SSH server (where AWS credentials are not configured) to an AWS S3 bucket easily, without missing any files or folders.

I was working on moving away from a legacy server to S3 bucket hosting for static files. The legacy server held many files and folders, and it did not have AWS access enabled. Due to limited storage, I could not run the zip command to compress the files before downloading them. Those files and folders later had to be moved to the S3 bucket.

The scp command is also available, but if the Internet connection is interrupted you have to start again, and FileZilla took even more time than the terminal command. While googling, I found good solutions to tackle this problem, provided you have access to the SSH server and AWS credentials for the S3 bucket.

Here, sshdirectorypath is the directory on the SSH server from which you want to get files and folders; everything is copied recursively. Likewise, /localmachine/directory/ is your local machine directory.
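The post’s own command isn’t shown, so here is one possible version of the workflow as a Python sketch. It assumes `rsync` is installed on both machines and the AWS CLI is configured on the local machine; `remote_dir` plays the role of sshdirectorypath and `local_dir` of /localmachine/directory/. Unlike scp, re-running the same rsync command after a dropped connection skips files that already arrived, and `--partial` keeps half-transferred files so they can be completed. The last helper checks that nothing was missed by comparing local files against a list of S3 keys you supply (for example, from `aws s3 ls --recursive`). All function names are hypothetical.

```python
from pathlib import Path
from typing import Iterable


def rsync_pull_command(ssh_target: str, remote_dir: str, local_dir: str) -> list[str]:
    """Recursively copy remote_dir from the SSH server into local_dir.

    A trailing slash on remote_dir copies its contents rather than the directory itself.
    """
    return ["rsync", "-avz", "--partial", "--progress", "-e", "ssh",
            f"{ssh_target}:{remote_dir}", local_dir]


def s3_sync_command(local_dir: str, bucket: str, prefix: str = "") -> list[str]:
    """Upload local_dir with the AWS CLI; only new or changed files are sent."""
    return ["aws", "s3", "sync", local_dir, f"s3://{bucket}/{prefix}"]


def local_relative_files(local_dir: str) -> set[str]:
    """Every file under local_dir as a '/'-separated relative path, like an S3 key."""
    root = Path(local_dir)
    return {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}


def missing_from_s3(local_dir: str, s3_keys: Iterable[str], prefix: str = "") -> set[str]:
    """Local files with no matching key in the bucket listing.

    Only files are compared: S3 has no real folders, just key prefixes.
    """
    uploaded = {key[len(prefix):] for key in s3_keys if key.startswith(prefix)}
    return local_relative_files(local_dir) - uploaded
```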
